Within the project Data Quality Explored, we want to design a course that addresses both complete beginners and learners with experience in programming and computer science. We want a smooth transition between the two levels, so that interested learners can progress from beginner to advanced level. One of the difficulties of this type of teaching is creating content that suits both groups and adapting it to each level of difficulty so that it matches each group's learning goals. In our case, we need to find a single example task that serves the needs of both learner groups.

Machine learning as a side step, preprocessing as the main focus

The topic of the course is data quality applied to machine learning: we use machine learning problems and datasets to compare how differences in data quality affect the performance of the models. Data quality is handled in a step called “preprocessing”, which comes before the development of the machine learning model. Preprocessing is the focus of the course. The machine learning task only serves as a verification step, to understand how changes in data quality affect the performance of the model.
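To make the idea of a verification step concrete, here is a minimal sketch, not taken from the course material. It trains the same simple model (an ordinary least-squares line) twice: once on clean training labels and once after a data-quality defect has been injected, then scores both models on the same clean test set. The synthetic data, the choice of defect (a fraction of labels overwritten with 0, as if missing values had been filled with zeros) and all function names are hypothetical illustrations.

```python
import random


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a * x + b; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, mean_y - a * mean_x


def mean_abs_error(model, xs, ys):
    """Average absolute difference between predictions and true values."""
    a, b = model
    return sum(abs(a * x + b - y) for x, y in zip(xs, ys)) / len(xs)


def corrupt(ys, fraction, rng):
    """Hypothetical quality defect: overwrite a fraction of labels with 0."""
    out = list(ys)
    for i in rng.sample(range(len(out)), int(fraction * len(out))):
        out[i] = 0.0
    return out


def experiment(fraction, seed=0):
    """Train on (possibly corrupted) data, evaluate on clean test data."""
    rng = random.Random(seed)
    # Synthetic data (assumption, not AIS data): y = 3x + 10 plus small noise.
    xs = [rng.uniform(5, 50) for _ in range(400)]
    ys = [3 * x + 10 + rng.gauss(0, 2) for x in xs]
    train_x, test_x = xs[:300], xs[300:]
    train_y, test_y = ys[:300], ys[300:]
    model = fit_line(train_x, corrupt(train_y, fraction, rng))
    # Only the training data quality varies; the test set stays clean.
    return mean_abs_error(model, test_x, test_y)


if __name__ == "__main__":
    for fraction in (0.0, 0.1, 0.3):
        print(f"{fraction:.0%} corrupted labels -> MAE {experiment(fraction):.2f}")
```

Because the model and the test set are held fixed, any change in the error can be attributed to the quality of the training data, which is the role the machine learning task plays in the course.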

Finding a good example task to understand the significance of the learned methods

We want learners to understand the work done on a real problem, which gives meaning to the whole process we present. Examples have to be found in the form of machine learning problems and their associated datasets. The first step in designing this course was therefore to find the right problems to work on. We identified four main requirements for the ideal problem and its dataset:

- The problem should be simple: since the course targets beginners, the problem must not be too complex to dig into. It should be straightforward for anybody and not require much explanation. Also, machine learning models are only a side task here, so the problem should be solvable by a simple model that we can use directly as implemented in standard machine learning libraries.

- The problem should be interesting: one of the main challenges of an online course without a real tutoring system is to catch learners' interest and keep them engaged and motivated throughout the course. In our case, we believe that a good problem to work on makes this engagement much easier. Ideally, the problem would be a current research topic, so that learners get a sense of the importance of what they are learning. A real-world problem is also more attractive and allows us to create additional side content that broadens interest in the example's topic beyond the technical content.

- The dataset should be open source: the course is openly available online and must therefore use open source material.

- The dataset should be usable raw: we focus on the preprocessing step that precedes a machine learning experiment. This step includes all the changes made to the dataset before it can be used for the experiment, so we need data that can be used without too many changes. This also allows learners to work on a problem from beginning to end: if we had to modify the dataset heavily before using it in the course, learners would lose the freedom to reproduce the experiments themselves with other open data they find.

Our field of study

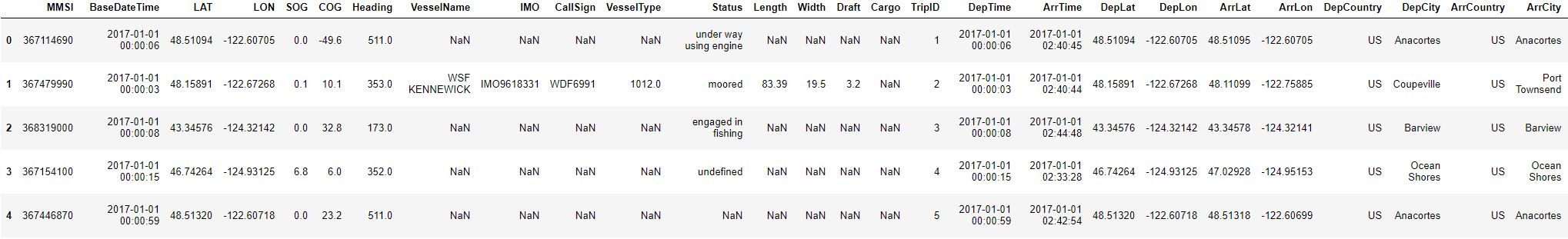

For the first chapter concerned by this issue, we had already narrowed our options: we wanted a problem and a dataset dealing mostly with numerical data, and we wanted to use logistics data because we already had experience with it. Specifically, we had previously worked with Automatic Identification System (AIS) data, spatio-temporal data collected from ships traveling at sea. The data contain the ship’s characteristics (identification code, name, destination, size, …) and data specific to the current trip (position, speed, orientation, …). We therefore looked for problems using AIS data, and for openly accessible AIS datasets.

AIS data are openly available from a few organizations, and they often differ in format, so we had several options to look at (e.g. U.S. Marine Cadastre, aprs.fi, sailwx.info). On the last two of these websites, downloading the datasets is not easy for a novice, so the best option is the open provider U.S. Marine Cadastre. Many more AIS providers exist, but unfortunately they are all commercial.

The difficulty of finding a fitting problem

As expected, the requirements presented earlier are hard to combine into a usable problem and dataset. Finding a problem that is both simple enough for beginners and interesting in terms of its research significance is rather hard: current research, after all, is not made of problems that are easy to solve. We could try to reduce a current research problem to an easier task and build less efficient models, but even then we would need a more complex model than the simplest ones offered by machine learning libraries. In our case, it is hard to build a very simple model on spatio-temporal data. Furthermore, because the course is about data quality, we need the collected data to be as raw as possible.

We analyzed two problems:

- Trajectory prediction: this problem is a current research topic, as it can help prevent serious risks at sea. Unfortunately, predicting future trajectories with reasonably good results requires a model that is not as straightforward as desired, and it would be too hard to adapt to the beginner level.

- Prediction of estimated time of arrival: we had worked on this problem before and knew it very well. It is possible to build an easy solution with straightforward algorithms. However, the data need a lot of preprocessing: we receive AIS data as single messages that must then be grouped into trips, and predicting the time of arrival with simple methods requires all ships to reach the same arrival point. The datasets we found contained only parts of trips, which rarely ended at the same destination.
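The grouping of single messages into trips can be sketched as follows. This is a minimal illustration under stated assumptions, not the course's actual pipeline: each message is assumed to be already parsed into a record holding the ship's identifier (its MMSI), a timestamp in seconds and a position; the `AISMessage` class, the `group_into_trips` function and the two-hour gap threshold are all hypothetical choices. A new trip starts whenever a ship stays silent for longer than the threshold.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass(frozen=True)
class AISMessage:
    """Hypothetical parsed AIS position report."""
    mmsi: int         # ship identifier
    timestamp: float  # seconds since epoch
    lat: float
    lon: float


def group_into_trips(messages, max_gap_s=2 * 3600):
    """Split each ship's time-ordered messages into trips.

    A new trip starts when two consecutive messages of the same ship
    are more than max_gap_s seconds apart (assumed threshold).
    """
    trips = []
    ordered = sorted(messages, key=lambda m: (m.mmsi, m.timestamp))
    for _, ship_messages in groupby(ordered, key=lambda m: m.mmsi):
        current = []
        for msg in ship_messages:
            if current and msg.timestamp - current[-1].timestamp > max_gap_s:
                trips.append(current)
                current = []
            current.append(msg)
        if current:
            trips.append(current)
    return trips
```

Even with trips reconstructed this way, the second obstacle remains: a simple time-of-arrival model would still need the trips to share a common destination, which the available datasets rarely provided.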

Settling on a trade-off

To make sure that beginners can understand the core of the problem and the ways to solve it, we have to settle on tasks that are not a hot research topic. Since this could make the content less interesting for advanced learners, we need to make sure that the tasks are connected to the real world and plausible. This can be achieved with storytelling, which creates an engaging experience for the learner in addition to the purely academic knowledge.

In the end, the tasks we propose are rather simple (predict the length of a ship from its width, predict the mean speed from the type of vessel, …), but we can wrap them in a believable story: the learners are data scientists in charge of analyzing the AIS data they receive and of solving small problems for a coastal station that needs information about ships. We also include an introductory part about the data themselves, so that learners get engaged with the topic of maritime logistics at the beginning of the course and become more interested in the problems solved later.
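To give a sense of how simple such a task can be, here is a minimal sketch of the second one, predicting the mean speed from the type of vessel, using the most basic model possible: the average speed observed for each vessel type, with the overall average as a fallback for unseen types. The function names, vessel-type labels and speed values are hypothetical and are not taken from the course or from real AIS data.

```python
from collections import defaultdict


def fit_mean_by_type(records):
    """Learn the average speed per vessel type.

    records: list of (vessel_type, mean_speed_knots) pairs.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for vessel_type, speed in records:
        sums[vessel_type] += speed
        counts[vessel_type] += 1
    return {t: sums[t] / counts[t] for t in sums}


def overall_mean(records):
    """Average speed over all records, used for unseen vessel types."""
    speeds = [speed for _, speed in records]
    return sum(speeds) / len(speeds)


def predict(model, vessel_type, fallback):
    """Predict the mean speed of a vessel type, or the fallback if unseen."""
    return model.get(vessel_type, fallback)


if __name__ == "__main__":
    # Hypothetical training data: (vessel type, mean speed in knots).
    records = [("cargo", 12.0), ("cargo", 14.0), ("tanker", 10.0),
               ("fishing", 4.0), ("fishing", 6.0)]
    model = fit_mean_by_type(records)
    print(predict(model, "cargo", overall_mean(records)))
    print(predict(model, "passenger", overall_mean(records)))
```

A baseline like this leaves the model almost trivial, so any change in prediction error after a preprocessing step can be traced back to the data rather than to the model.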

In addition, we use other datasets in quizzes and practical tasks to ensure variety in the topics covered and to reduce the risk of boredom or fatigue caused by a single topic. Learners also see other examples and understand that they can apply their new knowledge in many different ways. For example, we introduce datasets of UFO sighting reports and wine quality analyses. Each dataset leaves room for imagination: students can picture themselves as wine producers trying to improve the quality of their wine, or as scientists on a Moon base trying to predict the arrival of aliens, …

Embedding those ideas into the next steps

The first chapter of the course will be tested internally at TUHH in the summer semester of 2020, which should give us a first idea of whether the concept works by the summer. The chapter will be openly available in September / October 2020.

The next development steps are the design of the next two chapters: image and text quality. We expect to follow the same idea: a believable problem to work on, in which learners can see themselves as data scientists solving real problems.

This post was written by Anna Lainé.
